http://delivery.acm.org/10.1145/1360000/1357229/p1121-gill.pdf?key1=1357229&key2=3377561721&coll=ACM&dl=ACM&CFID=86785888&CFTOKEN=56499296

Comments:

none yet

Summary:

The article presented a study of how well people can perceive emotion from plain text alone. The researchers recruited subjects to read passages of text and mark which emotions the writers had expressed. Some subjects were naive readers, while others were considered experts at examining text. The goal was to determine which qualities of a text help a naive reader's judgment come closer to what an expert reader would see.

They found that the best predictor of how closely a naive reader would match the experts was the length of the text. Shorter texts were harder for naive readers, presumably because they contain fewer clues about the writer's emotions. The researchers also noted that naive readers agreed with the experts more closely on joyful emotions.

Discussion:

This study was a little boring to read; however, I suspect it was boring only because it was clearly a preliminary study. The researchers are most likely working toward an automated system that analyzes texts from, for example, the blogosphere, Twitter, Facebook, or any other social application. Toward that end, this study would be very useful. An automated system could give companies some of the best feedback they could wish for.

If I am right in guessing that the eventual goal is to create such a service, I would be very supportive of it. I feel that something like this, while difficult, is somewhat overdue. It would be really nice to see this become a solved problem.

Sunday, April 18, 2010

Friday, April 16, 2010

“Human-Currency Interaction”: Learning from Virtual Currency Use in China, Assigned CHI '08

http://delivery.acm.org/10.1145/1360000/1357059/p25-wang.pdf?key1=1357059&key2=4052441721&coll=ACM&dl=ACM&CFID=84571263&CFTOKEN=98591662

Yang Wang, University of California

Scott D. Mainwaring, Intel Research

Comments:

Nate Brown

Summary:

This paper outlined a study of virtual money in China. The researchers interviewed 50 gamers about their experiences with virtual money. They wanted to explore the relationship between virtual money and real-life money and to gain better insight into how people perceive virtual money.

With virtual money (e.g., WoW money, Q points, Xbox Live Marketplace points) gaining a significant foothold in the gaming community, it is important to understand its impact on gaming culture. In China, a very large service called QQ offers a currency called Q points. When interviewing their subjects, the researchers found that many young gamers value Q points as highly as standard real-life currency. Others, however, held more varied views. One respondent mentioned that while online money attempts to distance itself from real money, there is no real difference: when we spend virtual money, we are still spending real money.

The researchers also looked into how gamers transfer virtual money from one player to another. They found that many players didn't trust the internet infrastructure and therefore preferred face-to-face transactions. The players would meet in real life and, sitting next to each other, conduct the avatar transfer. While this may seem to be a negative side effect of a poor internet infrastructure, the researchers found that many gamers considered it fun and exciting.

Discussion:

I think the study brought up some valid points, but on the whole it didn't tell me anything I didn't already know. I was really surprised by the story of a man who met another gamer to buy some items from him for 400 USD and was shocked to find that the seller was only 11 or 12 years old. I find it hard to believe that people can get so deeply into these games. The impression I got from this paper is that self-contained virtual currencies make it even easier for MMO addicts to withdraw from the real world.

The paper mentioned only once that the community must take great care to ensure that people are not taken advantage of, but I think more research should go into that area. Many people fail to see that when they spend virtual currency, they are really spending real money under a different label. We need to find some way to make this more apparent to gamers as a whole.

Thursday, April 15, 2010

Relating Documents via User Activity: The Missing Link, IUI '08

http://delivery.acm.org/10.1145/1380000/1378837/p389-pedersen.pdf?key1=1378837&key2=3852731721&coll=ACM&dl=ACM&CFID=81639924&CFTOKEN=12013848

Comments:

Aaron Loveall

Summary:

The researchers created a desktop sidebar tool that they called Ivan. The goal of Ivan was to help users more easily access documents and files related to the one they are currently viewing. Living in a desktop sidebar, Ivan monitors which windows are currently open and displays suggested documents that might be similar or otherwise associated.

The idea is that many users have trouble deciding where to put a file, and when they hastily stow it away somewhere, they often have trouble finding it again. With Ivan this becomes irrelevant, because the system should know that when a user opens a certain file they might also want another one, and it suggests these elusive files to the user.

Ivan operates by spying on the interactions between the file system and the individual open windows. This data allows Ivan to compile a list of related views for future display. Ivan doesn't know anything about what is going on inside the windows, however.
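To make the idea concrete for myself, here is a minimal Python sketch of activity-based relating, assuming Ivan does something as simple as counting which documents are open at the same time. The class and method names (`CoOpenTracker`, `record_snapshot`, `suggest`) are hypothetical, and the paper's actual algorithm may be quite different. Like Ivan, the sketch never looks inside the documents, only at which ones are open together.

```python
from collections import Counter, defaultdict


class CoOpenTracker:
    """Hypothetical sketch: relate documents purely by co-occurrence of open windows."""

    def __init__(self):
        # doc -> Counter of other docs observed open at the same time
        self.co_counts = defaultdict(Counter)

    def record_snapshot(self, open_docs):
        """Record one observation of the set of currently open documents."""
        docs = set(open_docs)
        for doc in docs:
            for other in docs:
                if other != doc:
                    self.co_counts[doc][other] += 1

    def suggest(self, current_doc, k=3):
        """Return up to k documents most often open alongside current_doc."""
        # .get avoids creating an empty entry for documents never seen before
        counts = self.co_counts.get(current_doc, Counter())
        return [doc for doc, _ in counts.most_common(k)]


tracker = CoOpenTracker()
tracker.record_snapshot(["budget.xlsx", "q3_report.docx"])
tracker.record_snapshot(["budget.xlsx", "q3_report.docx", "notes.txt"])
tracker.record_snapshot(["budget.xlsx", "notes.txt"])
print(tracker.suggest("q3_report.docx"))  # ['budget.xlsx', 'notes.txt']
```

Counting co-open windows is about the simplest signal that needs no knowledge of file contents, which matches the post's point that Ivan only sees window and file-system activity.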

Conclusion:

Personally, I don't feel that I would get a tremendous amount of use from Ivan. I, and I would assume most other computer scientists, take great pains to keep our file systems very neatly organized. For many of our less technical peers, however, Ivan could be of great assistance. I see it being most useful in a business setting where, for example, you are on the phone discussing a specific topic and need to open a document on it quickly. If you already have something else about that topic open, Ivan should already have the information in front of you.

This would be a good feature to include with an operating system. It would integrate well and be a nice selling point. I would encourage the researchers to see if Microsoft would want to include this in Windows 8.

Wednesday, April 14, 2010

Who, What, Where & When: A New Approach to Mobile Search, IUI '08

http://delivery.acm.org/10.1145/1380000/1378817/p309-church.pdf?key1=1378817&key2=3643821721&coll=ACM&dl=ACM&CFID=81639924&CFTOKEN=12013848

Comments:

Summary:

The paper presents a prototype of a new kind of mobile search engine. It argues that because typing on mobile devices is difficult, users will always enter short, vague search terms. This means the traditional search model becomes less capable of returning the desired results. To address this, the researchers propose including more context-sensitive data with each search. Google's and Yahoo's mobile searches currently take the user's location into account; however, much more can be done.

The researchers created their own prototype search engine that takes more context-sensitive data into account. It uses past queries to make generalizations about the user's preferences, and the results page is a dynamic display based on this information.

As the figure shows, the results depend heavily on physical location and previous searches. Past queries are shown as yellow markers, while results are shown as red markers. The two sliders at the bottom also affect the results of a query. The top slider is based on time, going from earlier to now, and bases results on when the queries were made. The second slider goes from broad to specific, controlling how much the results are affected by past queries and by the search engine's guesses about the user's preferences.
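Here is how I imagine the two sliders could combine, as a rough Python sketch. It assumes the time slider picks how far back in the query history to look, and the broad/specific slider linearly blends each result's base relevance with a history-based preference score. Both formulas, and all the names (`PastQuery`, `Candidate`, `preference_score`, `rank`), are my own assumptions for illustration, not the paper's method.

```python
from dataclasses import dataclass


@dataclass
class PastQuery:
    text: str
    timestamp: float  # hypothetical: seconds since some reference time


@dataclass
class Candidate:
    title: str
    relevance: float  # base relevance to the current query, 0..1 (assumed)
    keywords: frozenset


def preference_score(candidate, past_queries):
    """Fraction of past queries sharing at least one word with the candidate's keywords."""
    if not past_queries:
        return 0.0
    hits = sum(1 for q in past_queries if candidate.keywords & set(q.text.lower().split()))
    return hits / len(past_queries)


def rank(candidates, history, now, time_slider, specificity):
    """time_slider: 0.0 = whole history ("earlier"), 1.0 = only the present ("now").
    specificity: 0.0 = broad (ignore history), 1.0 = rely fully on history."""
    oldest = min((q.timestamp for q in history), default=now)
    cutoff = oldest + time_slider * (now - oldest)
    recent = [q for q in history if q.timestamp >= cutoff]

    def score(c):
        return (1 - specificity) * c.relevance + specificity * preference_score(c, recent)

    return sorted(candidates, key=score, reverse=True)


history = [PastQuery("thai food", 0), PastQuery("coffee shop", 90)]
cands = [
    Candidate("Thai Palace", 0.6, frozenset({"thai", "food"})),
    Candidate("Bean Cafe", 0.5, frozenset({"coffee", "cafe"})),
]
print([c.title for c in rank(cands, history, 100, 0.5, 1.0)])  # ['Bean Cafe', 'Thai Palace']
```

With the specificity slider at "broad" the history is ignored entirely, which is also why, if the guesses are often wrong, users might leave the sliders parked at one end.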

Discussion:

I think something like this could be either really cool or really annoying. The biggest problem is that it will guess wrong so often that people will only ever use the "now" and "specific" slider positions, essentially rendering the added features useless.

I have had some difficulty getting good results from broad terms on my phone and do see a need for improvement. The location features Google uses are really nice, and I have gotten a lot of use out of them. It only seems natural to add more context-sensitive data to the results we currently receive. Hopefully this will be turned into something great and useful.

Tuesday, April 13, 2010

EMG-based Hand Gesture Recognition, Assigned IUI '08

http://delivery.acm.org/10.1145/1380000/1378778/p30-kim.pdf?key1=1378778&key2=3542811721&coll=portal&dl=ACM&CFID=85842108&CFTOKEN=36893441

Authors

Jonghwa Kim, Stephan Mastnik, Elisabeth André

Augsburg, Germany

Summary:

This article outlined an EMG-based hand gesture control system for an RC car. The researchers placed three nodes on the user's arm to pick up EMG signals produced by the user's gesture movements.

The user would use hand gestures to tell the car to go forward (gesture 1), right (gesture 2), left (gesture 3), or rest (gesture 4).

Users received training on how to use the device before they were given the chance to actually use it. The motions were captured and then processed by a middleman before being sent to the RC car.
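To picture what the "middleman" might do, here is a small Python sketch that turns a window of three-channel EMG samples into one of the four gestures and then into a car command. It assumes a per-channel RMS feature and a nearest-centroid classifier, chosen only because they are short; the post doesn't say which features or classifier the paper actually used, and `COMMANDS`, `train_centroids`, and `to_command` are hypothetical names.

```python
import math

# Hypothetical mapping from the four gesture classes in the post to RC-car commands.
COMMANDS = {1: "forward", 2: "right", 3: "left", 4: "rest"}


def rms(samples):
    """Root-mean-square amplitude of one EMG channel window."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def features(window):
    """window: list of three channel sample lists -> list of three RMS values."""
    return [rms(channel) for channel in window]


def train_centroids(labeled_windows):
    """labeled_windows: list of (gesture_id, window). Returns gesture_id -> mean feature vector."""
    sums, counts = {}, {}
    for gesture, window in labeled_windows:
        f = features(window)
        acc = sums.setdefault(gesture, [0.0] * len(f))
        for i, v in enumerate(f):
            acc[i] += v
        counts[gesture] = counts.get(gesture, 0) + 1
    return {g: [v / counts[g] for v in acc] for g, acc in sums.items()}


def classify(window, centroids):
    """Return the gesture whose centroid is closest (Euclidean) to this window's features."""
    f = features(window)
    return min(centroids, key=lambda g: math.dist(f, centroids[g]))


def to_command(window, centroids):
    """Middleman step: EMG window -> gesture id -> command string for the car."""
    return COMMANDS[classify(window, centroids)]


# Toy training windows standing in for the per-user training session.
training = [
    (1, [[1, -1], [0.1, -0.1], [0.1, -0.1]]),
    (2, [[0.1, -0.1], [1, -1], [0.1, -0.1]]),
    (3, [[0.1, -0.1], [0.1, -0.1], [1, -1]]),
    (4, [[0, 0], [0, 0], [0, 0]]),
]
centroids = train_centroids(training)
print(to_command([[0.9, -0.9], [0.1, 0.1], [0.0, 0.1]], centroids))  # forward
```

A per-user training step like `train_centroids` is one plausible reason the study gave users training first: the classifier needs examples of each person's own signals.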

In a user study with 30 subjects, the researchers found that users quickly became very good at controlling the car with this type of control system. It is important to note that the users did receive training on the gestures and on proper movements to achieve better results. The paper later explains that while this training is not ideal, a good system that uses training is a step toward one that needs no training at all.

Discussion:

On a scale of one to cool, this thing is awesome. Just the idea of being able to control something with the electrical pulses your own muscles produce is very intriguing. I would be worried about deploying a system like this in the real world because of electrical noise, but it seems like an interesting idea, assuming they can limit the effects of that noise.

I was really surprised that the users caught on as quickly as they did. With a system that seems so new, we wouldn't expect people to pick it up so fast. On the other hand, the gestures are much more natural than a joystick, so that might have helped.

I am looking forward to controlling my personal assistant robot with my mind, so I would strongly encourage the researchers to continue their work in this field.

Multi-touch Interaction for Robot Control, IUI '09

http://delivery.acm.org/10.1145/1510000/1502712/p425-micire.pdf?key1=1502712&key2=3140811721&coll=ACM&dl=ACM&CFID=81639924&CFTOKEN=12013848

Comments:

Patrick Webster

Summary:

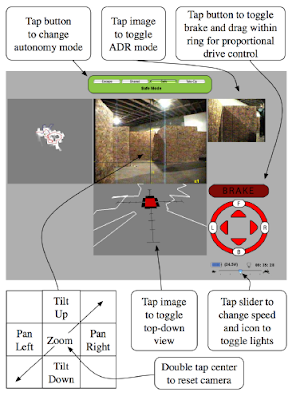

This paper described a new control device for robots that the researchers had created. The device was new in that it was a multi-touch tablet, which allowed for an entirely new method of controlling the robot. Most robot control is done using joysticks and sliders. While easy to use, these devices provide relatively few axes of control. The biggest drawback, however, is that they are not dynamic and cannot adjust to the differing needs of many robots; the robots themselves must adjust to the control scheme.

The display, as shown to the left, featured many useful screens for the user. The touch screen was taken into account in many ways, most notably in camera control. To move and zoom the camera, all the user had to do was double-tap part of the main control image. This would refocus the camera on the selected region at 2x zoom. To revert to the original view, the user would simply double-tap the image again.
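The double-tap behavior described above is essentially a two-state toggle. Here is a minimal Python sketch of that logic, assuming normalized image coordinates and a single fixed "original" view; `CameraView` and `DoubleTapZoom` are hypothetical names, and the real system presumably also had to drive the physical camera, which this ignores.

```python
from dataclasses import dataclass


@dataclass
class CameraView:
    center_x: float = 0.5  # normalized image coordinates, 0..1 (assumption)
    center_y: float = 0.5
    zoom: float = 1.0


class DoubleTapZoom:
    """Toggle: a double-tap centers on the tapped point at 2x; the next one restores the original view."""

    ZOOM_FACTOR = 2.0

    def __init__(self):
        self.original = CameraView()
        self.current = self.original
        self.zoomed = False

    def on_double_tap(self, x, y):
        if self.zoomed:
            # Second double-tap: revert, regardless of where the user tapped.
            self.current = self.original
        else:
            # First double-tap: refocus on the tapped region at 2x zoom.
            self.current = CameraView(x, y, self.ZOOM_FACTOR)
        self.zoomed = not self.zoomed
        return self.current


z = DoubleTapZoom()
print(z.on_double_tap(0.8, 0.3))  # CameraView(center_x=0.8, center_y=0.3, zoom=2.0)
print(z.on_double_tap(0.1, 0.1))  # CameraView(center_x=0.5, center_y=0.5, zoom=1.0)
```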

In their user study, they found that every person interacted with the device differently. Many people tried to manipulate the robot by pressing with differing amounts of force. Many, as was noted, attempted to use the multi-touch capabilities in ways the designers hadn't anticipated, which at times caused undesirable behavior.

Conclusion:

It seems that the most important discovery in this paper, cool as the robot control is, is that most users attempted to interact with the device in ways the designers didn't anticipate. Because of this, UI designers will have to substantially revamp how they implement user interfaces. Currently, our interfaces are implemented to handle specific input sequences and nothing else. With multi-touch screens, however, there are nearly infinite ways to interact with the interface. To improve the user's experience, we should look at designing interfaces around a few base rules and then find some way to map any form of interaction onto those rules. This would let us handle more kinds of interaction with less code.
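As a rough illustration of the "base rules" idea, here is a Python sketch in which any number of simultaneous touches collapses into one drive command: no touch means stop; otherwise the average touch position relative to the screen center sets speed and turning. This is purely my own hypothetical design (`Touch`, `DriveCommand`, and `interpret` are made-up names), not the interface from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Touch:
    x: float  # normalized screen coordinates, 0..1 (assumption)
    y: float


@dataclass(frozen=True)
class DriveCommand:
    speed: float  # -1 (reverse) .. 1 (forward)
    turn: float   # -1 (left) .. 1 (right)


STOP = DriveCommand(0.0, 0.0)


def clamp(v, lo=-1.0, hi=1.0):
    return max(lo, min(hi, v))


def interpret(touches):
    """Base rule: no touch -> stop; otherwise the centroid of all touches,
    relative to the screen center, sets speed (vertical) and turn (horizontal)."""
    if not touches:
        return STOP
    cx = sum(t.x for t in touches) / len(touches)
    cy = sum(t.y for t in touches) / len(touches)
    # Screen y grows downward, so touching above center means forward.
    return DriveCommand(speed=clamp((0.5 - cy) * 2), turn=clamp((cx - 0.5) * 2))


# One finger or two, the same rule applies.
print(interpret([Touch(0.5, 0.0)]))                    # DriveCommand(speed=1.0, turn=0.0)
print(interpret([Touch(0.25, 0.0), Touch(0.75, 0.0)]))  # DriveCommand(speed=1.0, turn=0.0)
```

The point of the design is that unexpected inputs, such as an extra finger, still land on a defined command instead of an unhandled sequence.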
